About This Service

Hi,

I am a freelancer with more than 1.5 years of experience with the latest tools in the software testing domain. I work as an SDET (Software Development Engineer in Test), also known as a test automator.

I have gained strong testing experience in popular domains such as e-commerce, ERP (Enterprise Resource Planning), and digital marketing, and I do well with these kinds of online websites.

Work Experience:

I have been working as a Software Automation Test Engineer for more than a couple of years. I have strong product knowledge across currently popular domains such as ERP, e-commerce, and digital marketing.

I work closely with Selenium WebDriver, a well-loved open-source tool among test automators like me.

Automation work can follow different types of frameworks. For most projects I used a Data Driven Framework, as the clients suggested, and I also developed some scripts using a Hybrid Framework (Module Driven with TestNG).
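To give a rough idea of the data-driven approach, here is a minimal sketch in plain Java. It is only an illustration, not code from a client project: the class `DataDrivenSketch`, the `LoginCase` rows, and the `isValidLogin` check are all hypothetical stand-ins, and in a real project the rows would more likely come from an Excel or CSV file and the check would drive a browser through Selenium.

```java
import java.util.ArrayList;
import java.util.List;

public class DataDrivenSketch {

    // One row of test data: the inputs plus the expected outcome.
    static final class LoginCase {
        final String username;
        final String password;
        final boolean expectedValid;

        LoginCase(String username, String password, boolean expectedValid) {
            this.username = username;
            this.password = password;
            this.expectedValid = expectedValid;
        }
    }

    // Hypothetical system under test; stands in for the real page or service.
    static boolean isValidLogin(String username, String password) {
        return !username.isEmpty() && password.length() >= 8;
    }

    // Test data is kept apart from test logic (assumed sample rows).
    static List<LoginCase> sampleCases() {
        List<LoginCase> cases = new ArrayList<>();
        cases.add(new LoginCase("alice", "s3cretPass", true));
        cases.add(new LoginCase("", "s3cretPass", false));
        cases.add(new LoginCase("bob", "short", false));
        return cases;
    }

    // Runs the same check against every row and collects failure messages.
    static List<String> runAll(List<LoginCase> cases) {
        List<String> failures = new ArrayList<>();
        for (LoginCase c : cases) {
            boolean actual = isValidLogin(c.username, c.password);
            if (actual != c.expectedValid) {
                failures.add("user='" + c.username + "' expected "
                        + c.expectedValid + " but got " + actual);
            }
        }
        return failures;
    }

    public static void main(String[] args) {
        List<String> failures = runAll(sampleCases());
        System.out.println(failures.isEmpty() ? "All cases passed" : "Failures: " + failures);
    }
}
```

The point of the design is that covering a new scenario means adding a data row, not writing a new script.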

Recently I started working with a Behavior Driven Development (BDD) framework using Cucumber in a Maven project. It compares well with the previous frameworks, especially because it lets clients understand the scripts easily, so it should suit future implementations.

I can also provide web service testing with Groovy and SoapUI for SOAP-based and REST-based services, using WSDL (Web Services Description Language) and WADL (Web Application Description Language) service URLs.

While developing automation scripts I have used different tools and plugins to automate websites built with HTML, Java, .NET, PHP, Ajax, Flux, and non-HTML pages, as well as Windows-based applications (1-tier, 2-tier, 3-tier, and N-tier apps). To automate these, I have mostly worked with Selenium, Java Robot, Sikuli, Actions, JavaScript, Maven, TestNG, Cucumber, Java OOP, keyboard functions, SoapUI, Groovy, etc.

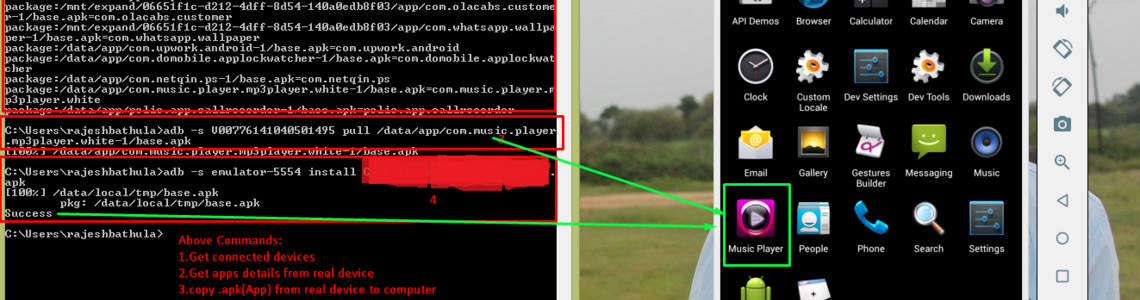

I have good experience using Appium for mobile .apk automation testing. I can create virtual Android devices to automate your .apk (mobile application) at the latest API levels (Android versions such as 7.0, 6.0, 5.0, ..) and run the same tests on a real device, using Appium Server and UI Automator Viewer. I also have good experience launching applications from computer to mobile, mobile to computer, or server to mobile device: using commands, I can install your .apk onto a mobile device from the server and then perform the testing with UI Automator Viewer.

I can also provide detailed log reports for both website testing and .apk testing.

Work Style:

Based on the website and the client's needs, I will create test suites that cover all functionality, each suite with successive steps. I will implement the code using Selenium, Java, Sikuli, etc., following each action made in the system exactly, step by step.

Each test can be run individually, module by module, or I can write a build-file script to run the entire project with a single click. Here I will follow the client's suggestions and needs, and perform the specific actions required to cover all the functionality of the system.
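The idea of running one module on its own or the whole project in one go can be sketched in plain Java as below. This is only a hedged illustration: the class `SuiteRunnerSketch` and the module names `login` and `checkout` are hypothetical, and in an actual project this role would normally be played by a TestNG suite file or a Maven build rather than hand-written code.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class SuiteRunnerSketch {

    // Module name -> test routine; LinkedHashMap keeps registration order.
    private final Map<String, Runnable> modules = new LinkedHashMap<>();

    void register(String name, Runnable test) {
        modules.put(name, test);
    }

    // Runs a single module; returns true if it finished without throwing.
    boolean runModule(String name) {
        Runnable test = modules.get(name);
        if (test == null) {
            throw new IllegalArgumentException("Unknown module: " + name);
        }
        try {
            test.run();
            return true;
        } catch (RuntimeException | AssertionError e) {
            System.out.println(name + " FAILED: " + e.getMessage());
            return false;
        }
    }

    // Runs every registered module and returns the names of those that failed.
    List<String> runAll() {
        List<String> failed = new ArrayList<>();
        for (String name : modules.keySet()) {
            if (!runModule(name)) {
                failed.add(name);
            }
        }
        return failed;
    }

    public static void main(String[] args) {
        SuiteRunnerSketch runner = new SuiteRunnerSketch();
        // Hypothetical modules: one passes, one fails on purpose.
        runner.register("login", () -> { });
        runner.register("checkout", () -> {
            throw new IllegalStateException("cart total mismatch");
        });
        runner.runModule("login");                                // one module only
        System.out.println("Failed modules: " + runner.runAll()); // whole project
    }
}
```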

All the developed scripts can be rerun for retesting and regression testing. They are easy to update when new enhancements arrive or existing scripts need changes, and easy for someone new to understand.

Preferred and well-versed technologies:

Eclipse Java, IntelliJ

Selenium WebDriver, Sikuli, Cucumber, BDD

TestNG, Maven, Jenkins, Appium, SoapUI, Groovy

- Quality Testing

- Layout and Visual Design

- Navigation & Information Architecture (Flow of Project)

- Writing & Content Quality

- Output log files through emails

- Error log

- Detailed documents for work efforts and tracking details

Feel free to contact me with any questions before buying an Hourlie.